The Importance of AI Assurance: Ensuring Trustworthy AI Systems

Artificial Intelligence (AI) and Machine Learning (ML) are transforming industries at unprecedented speed. From predictive analytics and customer personalisation to automation and strategic decision-making, AI is no longer experimental; it is mission-critical.

However, as organisations embed AI deeper into their operations, the risks grow alongside the rewards. Bias, model drift, data privacy concerns, regulatory scrutiny, and reputational damage are no longer hypothetical threats; they are real business risks.

This is where AI assurance becomes essential.

AI assurance provides the governance, validation, and oversight needed to ensure AI systems are reliable, ethical, compliant, and aligned with strategic business objectives.

What is AI Assurance?

AI assurance is a structured framework of processes and controls designed to validate, monitor, and govern AI systems throughout their lifecycle, from development and deployment to ongoing operation.

It includes:

- Model performance validation

- Risk assessment and mitigation

- Bias detection and fairness evaluation

- Ethical AI governance

- Regulatory compliance checks

- Continuous monitoring and model recalibration

AI assurance shifts AI from being a technical experiment to being a controlled, accountable business asset.

Why AI Assurance is Critical for Modern Organisations

1. Risk Mitigation in Complex Systems

AI systems are inherently probabilistic and often operate in dynamic environments. Without oversight, they can:

- Produce biased or discriminatory outcomes

- Drift from intended performance due to changing data

- Expose sensitive information

- Make decisions that conflict with business policies

AI assurance introduces structured validation and monitoring processes that reduce operational, reputational, and financial risks.

2. Ethical and Responsible AI Deployment

As AI systems increasingly influence hiring, lending, healthcare, and public services, ethical responsibility is paramount.

AI assurance frameworks promote:

- Fairness and non-discrimination

- Transparency and explainability

- Accountability in automated decision-making

- Human oversight in high-risk systems

By embedding ethical considerations into AI governance, organisations protect both stakeholders and brand integrity.
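One common way to make "fairness and non-discrimination" measurable is to compare how often each group receives a positive decision. The sketch below is a minimal illustration, not a prescribed method: the function names, the group labels, and the sample decisions are all hypothetical, and demographic parity is only one of several fairness measures an organisation might choose.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(groups, decisions):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(groups, decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions: 1 = approved, 0 = declined.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
gap = demographic_parity_gap(groups, decisions)
print(f"selection rates: {rates}")
print(f"demographic parity gap: {gap:.2f}")
```

A gap near zero means the groups are selected at similar rates. What counts as an acceptable gap is a policy decision for the organisation, not something the code can settle.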

3. Regulatory Compliance and Emerging AI Laws (Australia)

Australia is moving toward stronger AI regulation, guided by the Voluntary AI Safety Standard (VAISS) and existing mandatory laws. While the VAISS guardrails are not yet compulsory, they signal the direction of future regulation and outline expectations around governance, testing, transparency, and risk management.

Organisations must already comply with mandatory frameworks such as the Privacy Act, Australian Consumer Law, and sector‑specific rules (e.g., APRA CPS 234 for financial services, TGA requirements for AI‑enabled medical devices). These laws require responsible data handling, transparency, fairness, and accountability in automated decision‑making.

AI assurance helps organisations demonstrate compliance with both current legal obligations and emerging expectations, including:

- Model transparency and documentation

- Risk classification aligned to VAISS guardrails

- Bias testing and monitoring

- Clear governance and accountability structures

This positions organisations to meet today’s requirements while preparing for future mandatory AI regulation in Australia.

4. Sustaining Operational Reliability Over Time

AI models degrade if left unchecked. Changes in user behaviour, market conditions, or input data can cause performance drift.

AI assurance ensures:

- Continuous performance monitoring

- Model recalibration and retraining

- Data quality validation

- Alignment with business KPIs

This transforms AI systems from static models into managed, living systems.
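Continuous monitoring usually starts with a simple question: does the data the model sees today still look like the data it was validated on? The sketch below uses the Population Stability Index (PSI), a widely used drift measure, to compare a baseline sample with a recent one. It is an illustration under stated assumptions: the function name, the bin count, and the sample data are hypothetical, and the alert threshold in the comment is a common rule of thumb rather than a requirement from any standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: the recent sample is shifted upwards.
baseline = [i / 100 for i in range(100)]
recent = [0.3 + i / 100 for i in range(100)]

score = psi(baseline, recent)
# Rule of thumb (an assumption, tune per use case): above 0.25 suggests major drift.
if score > 0.25:
    print(f"PSI {score:.2f}: investigate and consider retraining")
```

In practice a check like this would run on a schedule for each important input feature and for the model's output scores, with alerts routed to whoever owns the model.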

5. Strengthening Stakeholder Confidence

Trust determines AI adoption.

Executives need confidence in decision-support systems. Customers need assurance that AI-driven processes are fair. Regulators require accountability.

By implementing AI assurance practices, organisations demonstrate maturity, responsibility, and long-term commitment to trustworthy AI.

The Expanding Scope of AI Assurance: From Governance to Competitive Advantage

AI assurance is not just about risk reduction; it is a strategic differentiator.

Organisations that operationalise AI governance effectively can:

- Accelerate AI deployment with confidence

- Improve decision quality

- Enhance brand reputation

- Attract enterprise customers who demand responsible AI

- Build scalable, audit-ready AI systems

Responsible AI is increasingly becoming a competitive advantage.

How Validation Driven Machine Learning (VDML) Enhances AI Assurance

Validation Driven Machine Learning (VDML), developed by KJR, is a structured methodology that strengthens AI assurance by embedding validation at the core of the ML lifecycle.

VDML emphasises:

- Understanding contextual limitations of ML models

- Iterative validation across development stages

- Integration with ModelOps and DataOps practices

- Governance of model behaviour under real-world conditions

- Clear alignment between model outputs and business requirements

Rather than treating testing as a final step, VDML embeds validation as a continuous discipline, ensuring models remain reliable, explainable, and fit for purpose.

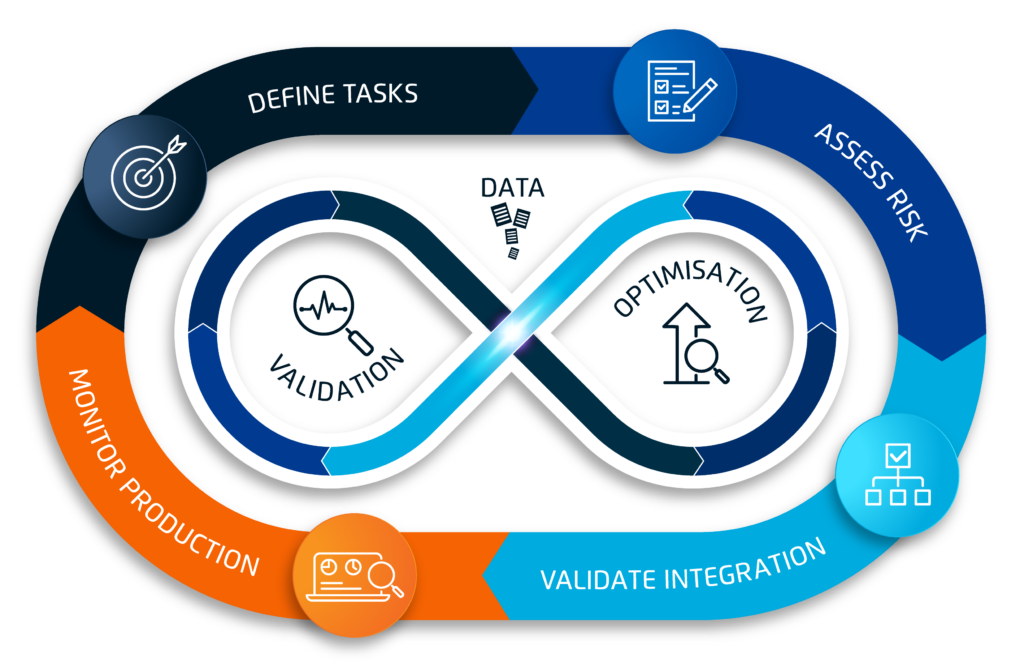

AI Assurance in Practice: A Lifecycle Perspective

Effective AI assurance spans multiple stages:

Define Task

By clearly articulating the intended benefits and purpose of the AI‑enabled system, stakeholders gain a shared understanding of the context in which it will operate. This establishes the baseline needed to assess potential risks.

Assess Risk

Using the defined task – along with any relevant legislation, standards, and industry compliance requirements – a structured risk assessment helps determine the level of governance, oversight, and assurance practices required.

Resolve Limitations

Through the use of realistic test datasets and detailed error analysis, KJR helps organisations uncover hidden faults, performance gaps, and model limitations, enabling appropriate risk mitigations to be put in place.
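Error analysis often means breaking an overall accuracy figure down by segment, because a model that looks acceptable on average can perform poorly for one part of the population. The following is a minimal sketch of that idea, not KJR's actual tooling: the record fields (`region`, `predicted`, `actual`), the function names, and the 0.10 tolerance are all hypothetical.

```python
from collections import defaultdict


def error_rate_by_slice(records, slice_key):
    """Group labelled predictions by a field and compute each group's error rate."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for r in records:
        s = r[slice_key]
        totals[s] += 1
        errors[s] += r["predicted"] != r["actual"]
    return {s: errors[s] / totals[s] for s in totals}


def flag_weak_slices(rates, overall, tolerance=0.10):
    """Return slices whose error rate exceeds the overall rate by more than tolerance."""
    return sorted(s for s, rate in rates.items() if rate - overall > tolerance)


# Hypothetical labelled test records.
records = [
    {"region": "metro", "predicted": 1, "actual": 1},
    {"region": "metro", "predicted": 0, "actual": 0},
    {"region": "metro", "predicted": 1, "actual": 1},
    {"region": "metro", "predicted": 0, "actual": 1},
    {"region": "rural", "predicted": 1, "actual": 0},
    {"region": "rural", "predicted": 0, "actual": 1},
    {"region": "rural", "predicted": 1, "actual": 1},
    {"region": "rural", "predicted": 0, "actual": 1},
]

overall = sum(r["predicted"] != r["actual"] for r in records) / len(records)
rates = error_rate_by_slice(records, "region")
weak = flag_weak_slices(rates, overall)
print(f"overall error rate: {overall:.2f}, by region: {rates}, flagged: {weak}")
```

A flagged slice is a prompt for investigation, for example collecting more representative data or adding human review for that segment, rather than a verdict on its own.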

Validate Integration

Machine learning components must be embedded within a broader service delivery pipeline that interacts correctly with organisational data, systems, and users. Ensuring this integration is robust is essential for safe and reliable operation.
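One practical integration control is to check every request at the boundary before it reaches the model, so that missing or implausible inputs are rejected rather than silently scored. The sketch below shows the idea under stated assumptions: the `FieldSpec` class, the `SCHEMA` contents, and the field names and ranges are hypothetical and would come from the organisation's own data definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    type_: type
    minimum: float
    maximum: float


# Hypothetical input contract for a scoring service.
SCHEMA = {
    "age": FieldSpec(int, 18, 120),
    "income": FieldSpec(float, 0.0, 1e7),
}


def validate_input(payload, schema=SCHEMA):
    """Return a list of problems; an empty list means the payload passed every check."""
    problems = []
    for name, spec in schema.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
            continue
        value = payload[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, spec.type_) or isinstance(value, bool):
            problems.append(f"wrong type for {name}: {type(value).__name__}")
        elif not spec.minimum <= value <= spec.maximum:
            problems.append(f"out of range for {name}: {value}")
    return problems


print(validate_input({"age": 35, "income": 52000.0}))
print(validate_input({"age": 200}))
```

Returning a list of problems rather than raising on the first one makes it easier to log every issue with a rejected request, which supports the audit trail that governance reviews rely on.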

Monitor Production

By supporting clients to track the performance, drift, and integrity of their AI‑enabled systems throughout deployment and operation, KJR helps implement the practical governance controls identified during the risk assessment.

This lifecycle approach ensures AI systems remain trustworthy long after deployment.

Discover More: AI Podcast Series

For technology leaders, AI practitioners, and governance professionals seeking deeper insights, KJR’s podcast series “Trusted AI Adoption | From Hype to Impact” explores:

- Real-world AI testing challenges

- Case studies in ML validation

- Governance frameworks in practice

- Practical strategies for strengthening AI reliability

Tune in to “From Hype to Impact”.

AI Implementation Workshops

KJR offers practical AI implementation workshops designed for:

- Executives

- Business leaders

- Risk and compliance professionals

- Technical teams

These workshops combine strategic understanding with operational insights, equipping organisations to deploy AI responsibly and ethically.

Final Thoughts: Trust is the Future of AI

AI’s transformative power is undeniable, but without assurance, it can become a liability rather than an asset.

Organisations that embed AI assurance into their strategy will not only mitigate risk but unlock sustainable innovation. Trustworthy AI is not optional; it is foundational to long-term success.