
Trusted AI adoption goes beyond ethical principles: it requires evidence‑based testing, validation, and continuous assurance. As AI systems shape decisions across Australian organisations, quality engineering and structured assurance become essential to ensuring fairness, reliability, and trust at scale.

Businesses are increasingly relying on AI and ML technologies to drive innovation, enhance operational efficiency, and gain a competitive edge. However, the deployment of AI systems is fraught with challenges and risks that can undermine their reliability and trustworthiness. This is where AI assurance comes into play.

Legacy systems in 2026 pose real delivery and security risks. Technology consulting helps Australian organisations modernise safely by improving quality, strengthening resilience, and enabling faster, more predictable change.

Legacy systems have become a major delivery and security risk for Australian organisations in 2026. Technology consulting provides the structure, assurance, and engineering discipline needed to modernise safely, improving resilience, reducing defects, and enabling faster, more predictable change.

Australian organisations need both manual testing and test automation to manage growing digital risk. Manual testing provides human insight for exploratory and usability checks, while Test Automation Services deliver speed and consistency for regression and CI/CD. The strongest results come from combining both approaches strategically.

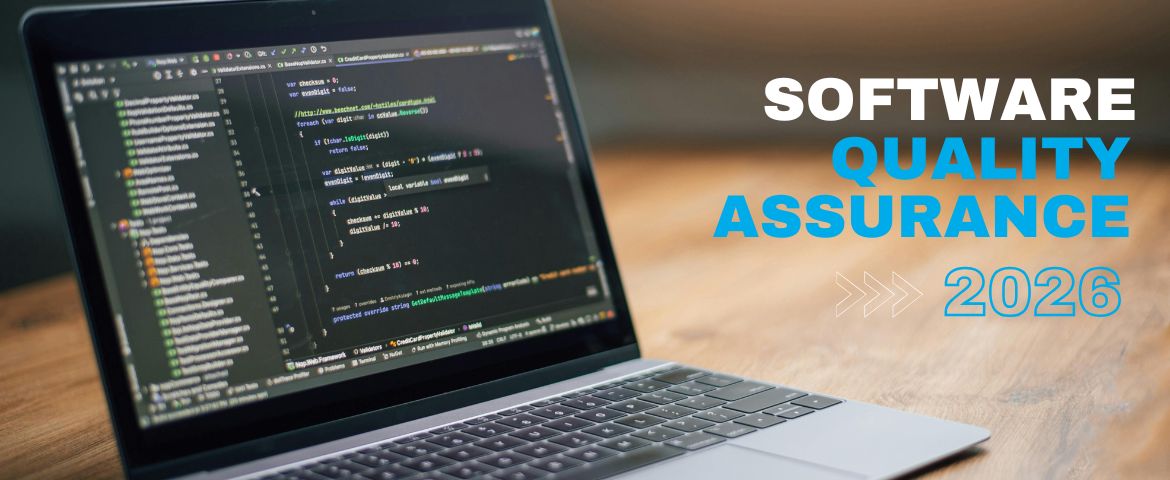

Why Modern Enterprises Still Fail at Software Quality (And How Professional Testing Services Fix It)

Many enterprises still suffer outages, integration issues, and compliance risks because testing is late, fragmented, or undervalued. Independent Software Testing Services provide the structure, assurance, and risk-based discipline needed to deliver reliable, compliant, and production-ready systems.

Software Quality Assurance is about trust and resilience, not just bugs. Discover why SQA matters in 2026 and how to apply it at scale.

Precision, resilience and trust under pressure: these are the qualities that define both KJR and elite ski cross athlete Euan Currie. For the second year running, KJR is proud to sponsor Euan as he competes on the world stage. Read more about Euan.

Australia’s new AI guidance makes an AI governance framework essential. Learn how accountability, testing and human oversight drive trusted AI.

AI systems behave differently from traditional software, which makes testing essential for safety in complex environments. This article explores why AI testing provides the evidence leaders need to make confident risk decisions, and how emerging standards emphasise data quality, model behaviour, and ongoing assurance.

Discover how responsible AI adoption starts with hands-on AI leadership. Learn practical steps, risks, and strategies discussed in KJR’s Trusted AI podcast.

As AI adoption accelerates across government and industry, recent incidents have exposed a critical truth: most AI failures aren’t caused by the technology itself.